Framework for a Robust Data Pipeline

I work with data in the social sector quite often. As the ‘principal data guy’ at work, I have identified the following stages of the data pipeline –

- Data Collection

- Data Digitisation & Cleaning

- Data Analysis

- Report Generation

- Miscellaneous (Dashboards or MIS)

In this article, I am going to share a framework that I use myself to plan data-related projects. This framework helps ensure that you get the data that you need, as cleanly as possible, with as little PITA¹ as possible.

Under each of the stages I list the steps involved in a largely sequential order. This is followed by some general comments from my experience and an estimate of the time the stage would typically take, again based on my experience.

Suggestion: A while ago I wrote an article called Lessons from Designing a Data Collection System in India that WORKS where I highlighted some specific examples on how to work with data. I think these two articles complement each other well.

Let’s go.

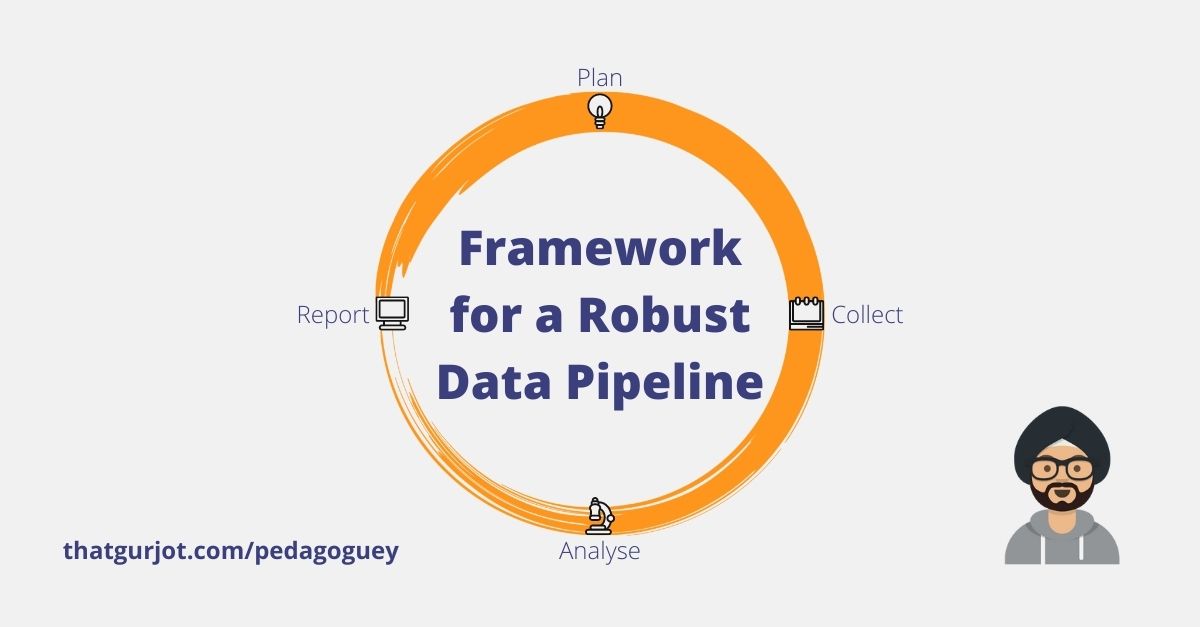

The Circle of Life

Plan. Collect. Analyse. Report.

That’s the order. If you mess it up you’ll either end up with crap 💩 or carp 🐠. And you don’t want that.

1. Data Collection

- Confirming the objectives of the data collection exercise

- Deciding who will fill the form and how many times it will be filled

- Preparing first list of questions to be included in the data capture form

- Calculating the total time taken by each surveyor to fill the form

- Reducing the time and effort taken to fill the form by removing superfluous questions that do not provide relevant information, or by merging questions to get multiple data points from a single question

- Modifying the UX of the form to pre-populate and standardise the input, minimise text input and implement checks for data validity

- Reevaluating the modified form for internal coherence and edge cases

- Implementing the form for data collection

- Providing support to surveyors during the pilot phase or the first few days of implementation to account for modifications because of missed edge cases
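The validity checks mentioned in the steps above can be sketched in a few lines of Python. This is only an illustration under assumptions: the field names (`school_id`, `grade`, `students_enrolled`, `students_present`), the allowed grades and the `validate_submission` helper are all hypothetical and not taken from a real form.

```python
# Hypothetical form schema: every field name and rule here is an assumption.
ALLOWED_GRADES = {"1", "2", "3", "4", "5"}  # offered as a dropdown, not typed in


def validate_submission(row):
    """Return a list of human-readable problems found in one form submission."""
    problems = []

    # Required fields must be present and non-empty.
    for field in ("school_id", "grade", "students_enrolled", "students_present"):
        if not str(row.get(field, "")).strip():
            problems.append(f"{field} is missing")

    # Standardised input: grade must be one of the pre-populated choices.
    grade = str(row.get("grade", "")).strip()
    if grade and grade not in ALLOWED_GRADES:
        problems.append(f"grade '{grade}' is not a valid option")

    # Numeric and cross-field checks catch typos at the point of entry.
    try:
        enrolled = int(row["students_enrolled"])
        present = int(row["students_present"])
    except (KeyError, ValueError, TypeError):
        problems.append("enrolment and attendance must be whole numbers")
    else:
        if enrolled < 0 or present < 0:
            problems.append("counts cannot be negative")
        elif present > enrolled:
            problems.append("students_present exceeds students_enrolled")

    return problems
```

Running a check like this while the surveyor is still with the respondent is usually cheaper than chasing the same error during cleaning, which is why it sits in the form rather than in the analysis script.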

Comments

The more time you spend on this stage of the process, the less time you’ll have to spend pulling your hair out later. Think of the second-order consequences, imagine it from the perspective of the surveyors and the respondents, and figure out the potential bottlenecks (technological and systemic) or edge cases before they occur. It’s worth it.

Time frame: Ideally 5-7 days of planning and iteration followed by however many days of data collection.

2. Data Digitisation & Cleaning

- Converting hand-filled forms into digital data

- Cleaning the collected data: removing any discrepancies, incomplete or incorrect submissions; ensuring uniformity among data fields

- Checking for missing data and filling the gaps, or corroborating from other sources if need be
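As a rough sketch of the cleaning steps above, the snippet below trims and standardises text fields, drops exact duplicates and sets aside rows with missing or invalid values for follow-up instead of guessing them. The sample data, the column names and the `clean_rows` helper are invented for illustration.

```python
import csv
import io

# Hypothetical raw export: column names and values are made up for illustration.
RAW = """school_id,district,students_present
S01, Pune ,35
S02,pune,
S03,PUNE,forty
S01, Pune ,35
"""


def clean_rows(raw_csv):
    """Split rows into clean records and ones that need a human follow-up."""
    clean, follow_up, seen = [], [], set()
    for row in csv.DictReader(io.StringIO(raw_csv)):
        # Uniformity: trim whitespace and use one spelling per district.
        row = {key: (value or "").strip() for key, value in row.items()}
        row["district"] = row["district"].title()

        # Exact duplicates (for example a form submitted twice) are dropped.
        fingerprint = tuple(row.values())
        if fingerprint in seen:
            continue
        seen.add(fingerprint)

        # Missing or non-numeric counts are set aside rather than guessed.
        if not row["students_present"].isdigit():
            follow_up.append(row)
            continue

        row["students_present"] = int(row["students_present"])
        clean.append(row)
    return clean, follow_up


clean, follow_up = clean_rows(RAW)
print(len(clean), "clean rows;", len(follow_up), "rows to follow up")
```

Whether a row like `S03` gets corrected, re-collected or discarded is exactly the kind of small decision that affects the analysis, so the sketch only flags it.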

Comments

If the objectives of the data collection exercise are clear and enough time was spent planning the data capture formats, this should be a fairly easy task. Otherwise it’ll be like toasting marshmallows in hellfire. This is largely grunt work so it’s best done by somebody else. But keep in mind that a number of small decisions related to the analysis have to be taken at various steps along the way when cleaning data. So don’t just delegate it to the intern; ensure that everyone is on the same page first. If the data is of critical importance, do it yourself.

Time frame: Anywhere between 2 and 5 days depending on the size of the data and how messed up it is.

3. Data Analysis

- Preliminary analysis to understand the nature of the data and identify potential relationships between data points or analysis metrics

- Identifying the key performance indicators (KPIs) and objectives for which the analysis is to be conducted (done in partnership with all stakeholders)

- Converting the cleaned data into necessary formats for the purpose of the analysis

- Analysing the data (the choice of analysis software depends on the objectives; Excel is often a great place to start investigating the data before moving on to R, Python or Stata for the actual number crunching)

- Visualising the data to gather new insights

- Revisiting analysis process in case of a change in objectives
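For the preliminary analysis step, a grouped summary is often enough to get a first feel for the data. The sketch below uses only Python’s standard library; the records, the field names and the `summarise` helper are hypothetical.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical cleaned records: the fields and numbers are invented.
records = [
    {"district": "Pune", "attendance_pct": 82.0},
    {"district": "Pune", "attendance_pct": 90.0},
    {"district": "Nashik", "attendance_pct": 70.0},
    {"district": "Nashik", "attendance_pct": 74.0},
    {"district": "Nashik", "attendance_pct": 96.0},
]


def summarise(rows, group_key, value_key):
    """Group rows by one field and report count, mean and median of another."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])
    return {
        name: {"n": len(values), "mean": mean(values), "median": median(values)}
        for name, values in groups.items()
    }


summary = summarise(records, "district", "attendance_pct")
for district, stats in summary.items():
    print(district, stats)
```

Reporting the median next to the mean is a cheap way to spot a group where a single unusual value is pulling the average.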

Comments

Each data set will be analysed differently, but take care not to fall into the traps of statistical pitfalls and cognitive biases.

Time frame: If the data is clean and the objectives are clear, this should be a breeze and be done in under a week. Otherwise there’ll be a ton of back and forth involved and the whole thing could take over a month.

4. Report Generation

- Collating necessary insights and visualisations from the analysis that support the objectives of the report

- Generating the final version of the graphics to keep a consistent design scheme

- Preparing a draft and circulating among all stakeholders for comments

- Updating the draft as per suggestions

- Preparing a presentation about the report if necessary

Comments

You might have to revisit your analysis software to generate graphs and conduct investigations that you previously might have missed. It’s only natural. Things become a lot clearer when you start writing them down.

Time frame: Depending on the expected depth of analysis and the clarity of the framework and objectives, this should be done in 5-7 days. However, as the clarity goes down, the back and forth required goes up and so do the PITA levels.

5. Miscellaneous (Dashboards or MIS)

- If the data is collected on a fixed timeline (monthly, quarterly, yearly), it would be best to keep the data fields consistent in order to ease the collection process as well as allow historical correlation

- If the data is being collected on a regular basis, it would be useful to have a dashboard to display the latest and historical data along with a time-series analysis; preparing such a dashboard is a separate task entirely and cannot simply be an extension of the usual analysis
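One simple way to keep data fields consistent between rounds is to compare the column headers of each new round against the previous one before merging anything. A minimal sketch, assuming hypothetical column names and a made-up `compare_fields` helper:

```python
def compare_fields(previous_fields, current_fields):
    """Report fields dropped from or added to a collection round."""
    previous, current = set(previous_fields), set(current_fields)
    return {
        "dropped": sorted(previous - current),
        "added": sorted(current - previous),
    }


# Hypothetical column headers from two quarterly rounds.
q1 = ["school_id", "district", "students_enrolled", "students_present"]
q2 = ["school_id", "district", "enrolment", "students_present"]

print(compare_fields(q1, q2))
```

A renamed field shows up as one dropped and one added entry, which is usually the cue to fix the form rather than patch the dashboard.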

Comments

A dashboard is usually contested ground in consultancy work because clients tend to have a preexisting platform (often built with some obscure PHP library). They would then either roadblock the creation of a fresh dashboard or want it to be integrated with the old one. This tends to get very subjective and arriving at a compromise often becomes necessary. Also, it goes without saying that if creating a dashboard is the ultimate objective of the data collection exercise, there will be significant modifications to the framework. Perhaps I’ll write an article about it later.

Time frame: Dashboard preparation can take as little as 5 days and up to 30 days depending on how the data is being provided, what features are desired and how it is to be hosted.

End notes

My work deals largely with the government school system in India. The framework I presented here could possibly help with other systems as well. I hope it helps you!

Did I miss anything? Hit me up on Twitter or send me an email. Links are at the bottom of this page.

Keep up with the Pedagoguey!

Check out @pedagoguey on Instagram – that is where I post bite-sized versions of these blog posts, because sometimes reading a tweet thread is all you have the energy for.

You can read all the Pedagoguey blog posts here: pedagoguey.

¹ pain in the ass